Do you want to optimize your WordPress robots.txt file for better search engine rankings? If you are looking for a complete guide, keep reading this article.

When it comes to improving your site’s SEO, one often overlooked file is the WordPress robots.txt file. This simple, plain text file plays a powerful role in guiding search engine crawlers on how to interact with your website.

Most people either forget about the robots.txt file or don’t put that much effort into tweaking it.

To help you optimize your robots.txt file for search bots, I will cover the following in this tutorial:

- What the robots.txt file is

- Why it is important

- The main configurations

- How to optimize it for the best SEO practices

- Common mistakes you need to avoid while dealing with the robots.txt file

Before going into technical details, let’s see what the robots.txt file is and why it is crucial.

Table of Contents

- What is a Robots.txt File?

- Why WordPress Robots.txt Matters for SEO

- Default WordPress Robots.txt Configuration

- Common Robots.txt Rules and How to Use Them

- Recommended Robots.txt Example for WordPress (with explanation)

  - 1. Block All Crawlers from the Entire Site

  - 2. Block a Specific Directory (and Its Contents)

  - 3. Allow Only a Specific Bot

  - 4. Block Just One Bot, Allow Others

  - 5. Block a Single Web Page

  - 6. Allow Only a Subdirectory

  - 7. Block a Specific Image from Google Images

  - 8. Block All Images from Google Images

  - 9. Block Specific File Types Using Wildcards for Pattern Matching

  - 10. Block All Crawlers, But Allow Mediapartners-Google

  - 11. Restrict All Bots

  - 12. Block a Specific Bot from the Whole Site

  - 13. Block Sensitive WordPress Directories

  - 14. Block All Bots Except Google

  - 15. Block Google Images from Uploads Directory

- How to Optimize the WordPress Robots.txt File for Better SEO

- How to Edit WordPress Robots.txt File

- How to Test Robots.txt File

- Common Mistakes to Avoid with WordPress Robots.txt

- Frequently Asked Questions

  - What Is the WordPress Robots.txt File and Why Does It Matter?

  - How to Edit the Robots.txt File in WordPress?

  - Can I Block Search Engines from Indexing Certain Pages?

  - What Should a Simple Robots.txt File Look Like?

  - Will a Bad Robots.txt File Hurt My Search Rankings?

  - Where Is the Robots.txt File Path in WordPress?

  - Should I Use an SEO Plugin to Manage the Robots.txt File?

- Conclusion

What is a Robots.txt File?

A robots.txt is a plain text file in your website’s root folder that tells search engine crawlers which parts of the website they can or can’t access.

It acts as a set of instructions for bots like Googlebot, helping control the crawling process for better site performance and SEO. If no physical file exists, WordPress generates a virtual robots.txt file automatically; once you create your own robots.txt file in the root directory of your website, that file is served instead.

Why WordPress Robots.txt Matters for SEO

The WordPress robots.txt file guides search engine crawlers on which parts of your site to access or ignore. This helps you control your crawl budget, ensuring that important pages like product listings or blog posts get crawled and indexed efficiently.

When search engines waste time crawling unnecessary admin pages or duplicate content, it can negatively impact your search performance.

A well-optimized robots.txt file can also keep crawlers away from duplicate content. Keep in mind that robots.txt controls crawling rather than indexing, so it reduces wasted crawls instead of guaranteeing that duplicates stay out of the index. By focusing crawlers on your most valuable content, you give your best pages a better chance of being crawled promptly and ranking well in search results.

Now you know why you should spend time tweaking the WordPress robots.txt settings.

Default WordPress Robots.txt Configuration

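On a default install, WordPress serves a virtual robots.txt file that looks like this (since WordPress 5.5, it also appends a Sitemap line pointing to the built-in wp-sitemap.xml index when core sitemaps are enabled):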

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Common Robots.txt Rules and How to Use Them

In this section, we will show you some of the common robots.txt rules or directives you should know:

- User-agent: Specifies which search bots the rules that follow apply to.

  Example: User-agent: * (applies to all crawlers)

- Disallow: Prevents search bots from accessing specific directories or pages.

  Example: Disallow: /wp-admin/ (blocks access to the WordPress admin area)

- Allow: Overrides a Disallow for one particular path or file.

  Example: Allow: /wp-admin/admin-ajax.php (allows access to a file that many plugins and themes rely on)

- Sitemap: Tells search engines where to find your XML sitemap. Use the full, absolute URL.

  Example: Sitemap: https://yourdomain.com/sitemap.xml

- Crawl-delay: Asks search bots to wait between requests. Note: Google ignores this directive.

  Example: Crawl-delay: 10 (a 10-second delay between requests)

To fine-tune crawler access and how your crawl budget is spent, explore the additional robots.txt rules documented by Google and robotstxt.org.
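If you want to see how a crawler interprets these directives, Python’s standard library ships a basic robots.txt parser, urllib.robotparser. The minimal sketch below feeds it a small sample file (the build_parser helper and the yourdomain.com URLs are placeholders) and asks what a generic crawler may fetch. Treat it as an illustration only: this parser applies the first matching rule in file order rather than the most specific one, and it does not understand * or $ wildcards, so its answers can differ from Google’s.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt; yourdomain.com is a placeholder domain.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Crawl-delay: 10
Sitemap: https://yourdomain.com/sitemap.xml
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    # Disallow: /wp-admin/ blocks everything under that path for all crawlers.
    print(rp.can_fetch("*", "https://yourdomain.com/wp-admin/options.php"))  # False
    print(rp.can_fetch("*", "https://yourdomain.com/hello-world/"))  # True
    # Crawl-delay comes back as an int (Google ignores it; some other bots honor it).
    print(rp.crawl_delay("*"))  # 10
    # site_maps() (Python 3.8+) lists every Sitemap line in the file.
    print(rp.site_maps())  # ['https://yourdomain.com/sitemap.xml']
```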

Recommended Robots.txt Example for WordPress (with explanation)

Optimizing your WordPress site’s robots.txt file is a great way to guide search engines on what should and shouldn’t be crawled.

Here are some practical and commonly used robots.txt rules that you might find handy, along with explanations and best practices for each scenario.

1. Block All Crawlers from the Entire Site

This rule stops all bots from crawling your site. Keep in mind that URLs can still be indexed without being crawled, for example when other sites link to them. Also, some special-purpose crawlers, such as Google’s AdsBot, ignore the * group and need their own explicit rules.

User-agent: *

Disallow: /

2. Block a Specific Directory (and Its Contents)

Use this to prevent bots from accessing certain folders. It’s better to use authentication for private content, as robots.txt is publicly visible.

User-agent: *

Disallow: /downloads/

Disallow: /spam/

Disallow: /recipes/italian/pizza/

3. Allow Only a Specific Bot

If you want only Google News to crawl your site, here’s how:

User-agent: Googlebot-news

Allow: /

User-agent: *

Disallow: /

4. Block Just One Bot, Allow Others

Stop a single bot (like Unnecessarybot) while letting everyone else in:

User-agent: Unnecessarybot

Disallow: /

User-agent: *

Allow: /

5. Block a Single Web Page

Prevent bots from crawling specific files:

User-agent: *

Disallow: /unwanted_file.html

Disallow: /no-crawling/other_unwanted_file.html

6. Allow Only a Subdirectory

Block the entire site, but let bots into one public folder:

User-agent: *

Disallow: /

Allow: /public/

7. Block a Specific Image from Google Images

Great for keeping select images out of Google Images results:

User-agent: Googlebot-Image

Disallow: /images/recipes.png

8. Block All Images from Google Images

Hide all your images from Google Images:

User-agent: Googlebot-Image

Disallow: /

9. Block Specific File Types Using Wildcards for Pattern Matching

You can block all files of a certain extension, such as GIFs or PDFs:

User-agent: Googlebot

Disallow: /*.gif$

Or for all bots and PDFs:

User-agent: *

Disallow: /*.pdf$

Or block all Excel files (.xls):

User-agent: Googlebot

Disallow: /*.xls$

Please note that Google supports wildcards, but not all bots do.
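Here is what the two special characters do in Google’s syntax: * matches any sequence of characters, and a $ at the end means the URL must end there. The minimal Python sketch below turns a rule path into a regular expression along those lines (pattern_to_regex and pattern_matches are hypothetical helper names, and this is an illustration, not Google’s own matcher). Rules are compared against the URL path plus any query string, which is why the PDF rule does not match a URL with ?download=1 appended.

```python
import re


def pattern_to_regex(rule_path: str):
    """Compile a robots.txt path pattern (Google-style * and $) into a regex."""
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape everything literally, then turn each escaped * back into ".*".
    body = re.escape(rule_path).replace(r"\*", ".*")
    # Rules match from the start of the URL path; a trailing $ also pins the end.
    return re.compile("^" + body + ("$" if anchored else ""))


def pattern_matches(rule_path: str, url_path: str) -> bool:
    """True if the rule applies to url_path (path plus any query string)."""
    return pattern_to_regex(rule_path).match(url_path) is not None


if __name__ == "__main__":
    print(pattern_matches("/*.pdf$", "/files/guide.pdf"))             # True
    print(pattern_matches("/*.pdf$", "/files/guide.pdf?download=1"))  # False
    print(pattern_matches("/wp-admin/", "/wp-admin/options.php"))     # True
```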

10. Block All Crawlers, But Allow Mediapartners-Google

Useful if you want AdSense ads to work but keep your content out of search results:

User-agent: *

Disallow: /

User-agent: Mediapartners-Google

Allow: /

11. Restrict All Bots

Sometimes used on staging or development sites:

User-agent: *

Disallow: /

12. Block a Specific Bot from the Whole Site

If you’re having trouble with a particular bad bot:

User-agent: BadBotName

Disallow: /

13. Block Sensitive WordPress Directories

A smart default for WordPress:

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-login.php

14. Block All Bots Except Google

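Let only Google’s main crawler through; everyone else gets blocked. The group must name Googlebot, the user-agent token Google’s web crawler matches, and an empty Disallow: line means nothing is blocked for it:

User-agent: Googlebot

Disallow: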

User-agent: *

Disallow: /

15. Block Google Images from Uploads Directory

Block image crawling just in your uploads folder:

User-agent: Googlebot-Image

Disallow: /wp-content/uploads/

Combining different rules can get tricky, so consider using a WordPress plugin to help generate and manage your robots.txt file safely. Always test your robots.txt changes to ensure they work as intended!
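One reason combining rules gets tricky is that a crawler obeys only one User-agent group: the group that names its own token (compared case-insensitively), falling back to the * group only when no group names it. That is why, in example 10, Mediapartners-Google ignores the Disallow: / written for *. The minimal Python sketch below illustrates that selection step; parse_groups and rules_for are hypothetical helper names, and it simplifies real parsers (for instance, some Google crawlers fall back to the Googlebot group when no group names them).

```python
from __future__ import annotations

from collections import defaultdict

# Example 10 from this article: block everyone except the AdSense crawler.
EXAMPLE_10 = """\
User-agent: *
Disallow: /

User-agent: Mediapartners-Google
Allow: /
"""


def parse_groups(text: str) -> dict[str, list[tuple[str, str]]]:
    """Map each lowercased User-agent name to the (directive, path) rules of its group(s)."""
    groups: dict[str, list[tuple[str, str]]] = defaultdict(list)
    current_agents: list[str] = []
    in_rules = False  # becomes True once the current group starts listing rules
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:  # a User-agent line after rules starts a new group
                current_agents, in_rules = [], False
            current_agents.append(value.lower())
        elif field in ("allow", "disallow"):
            in_rules = True
            for agent in current_agents:
                groups[agent].append((field, value))
    return dict(groups)


def rules_for(crawler_token: str, groups: dict[str, list[tuple[str, str]]]) -> list[tuple[str, str]]:
    """Pick the one group a crawler obeys: its own name if present, otherwise '*'."""
    token = crawler_token.lower()
    if token in groups:
        return groups[token]
    return groups.get("*", [])


if __name__ == "__main__":
    groups = parse_groups(EXAMPLE_10)
    print(rules_for("Mediapartners-Google", groups))  # [('allow', '/')]
    print(rules_for("Googlebot", groups))             # [('disallow', '/')]
```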

Important: The robots.txt file only controls which URLs search engines can crawl; a blocked URL can still be indexed if other pages link to it. To keep a page out of search results, use a noindex robots meta tag in the page’s HTML, and leave that page crawlable so search engines can actually see the tag.
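For reference, the tag looks like <meta name="robots" content="noindex"> inside the page’s head section. The minimal Python sketch below uses the standard library’s html.parser to check whether a page’s HTML carries that directive; RobotsMetaFinder and has_noindex are hypothetical names, and the sketch does not look at the X-Robots-Tag HTTP header, which can also carry noindex.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """True if the page's robots meta tag includes the noindex directive."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return "noindex" in finder.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head><body></body></html>'
    print(has_noindex(page))  # True
```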

How to Optimize the WordPress Robots.txt File for Better SEO

Robots.txt directives tell search engine bots what they may and may not do with different parts of your site.

Keep the following in mind when writing a robots.txt file for WordPress:

Do’s

- You should specify the sitemap URLs of your site.

- Be careful when choosing a folder to Disallow, as it may affect how your site appears in search engines.

- You should Disallow cloaked affiliate link folders, such as /out or /recommends in your root directory, if you have one.

- You should Disallow stray HTML files in the root directory of your WordPress setup.

- Some site owners also Disallow the /wp-content/plugins/ folder to prevent crawling of unwanted plugin files. If you do, first make sure that no CSS or JavaScript your pages need for rendering is loaded from there.

Don’ts

- You should not use the robots.txt file to hide your low-quality content. By content, I mean the main content of your site, such as articles, blog posts, and images. If you want to keep some of it out of search results, use the appropriate method instead, such as the noindex option in your SEO plugin’s on-page settings.

- You must not insert any comma, colon, or any other symbol that is not a part of the instruction.

- Do not add extra space between instructions unless it is done for a reason.

Note: The robots.txt file is publicly accessible at yourdomain.com/robots.txt. Do not use it to hide sensitive information, as anyone can view its contents.

Example of Optimized Robots.txt File

# Allow all bots access to everything except admin area

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

# Block plugins directory from crawling

Disallow: /wp-content/plugins/

# Provide sitemap location

Sitemap: https://yourdomain.com/sitemap_index.xml
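Notice that /wp-admin/admin-ajax.php is matched by both the Disallow and the Allow line. Google resolves this by applying the most specific rule, meaning the one with the longest matching path, and when an Allow and a Disallow are equally specific it applies the less restrictive Allow. The minimal Python sketch below applies that precedence to the rules in the example above; is_allowed is a hypothetical helper, and for brevity it treats rule paths as plain prefixes and ignores * and $.

```python
from __future__ import annotations

# (directive, path) pairs taken from the example file above.
RULES = [
    ("disallow", "/wp-admin/"),
    ("allow", "/wp-admin/admin-ajax.php"),
    ("disallow", "/wp-content/plugins/"),
]


def is_allowed(url_path: str, rules: list[tuple[str, str]]) -> bool:
    """Longest matching path wins; Allow wins a tie; no matching rule means allowed."""
    best_len, allowed = -1, True
    for directive, path in rules:
        if not path or not url_path.startswith(path):
            continue  # empty rules match nothing; non-prefixes do not apply
        is_allow = directive == "allow"
        if len(path) > best_len or (len(path) == best_len and is_allow):
            best_len, allowed = len(path), is_allow
    return allowed


if __name__ == "__main__":
    for path in ("/wp-admin/options.php", "/wp-admin/admin-ajax.php",
                 "/wp-content/plugins/some-plugin/script.js", "/hello-world/"):
        print(path, is_allowed(path, RULES))
    # /wp-admin/options.php False
    # /wp-admin/admin-ajax.php True
    # /wp-content/plugins/some-plugin/script.js False
    # /hello-world/ True
```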

Check how we optimized TechNumero’s Robots.txt file.

How to Edit WordPress Robots.txt File

You can edit your robots.txt file using the following methods:

- Via the cPanel

- Using an SEO plugin (like Yoast or Rank Math)

- Via an FTP application like FileZilla

How to Edit WordPress Robots.txt File via cPanel

If your hosting account includes cPanel access, follow these steps:

- Open cPanel and launch the File Manager.

- Go to your site’s root directory (usually public_html).

- Find the physical robots.txt file there. If it doesn’t exist yet, WordPress is serving a virtual one, so create a new file named robots.txt.

- Right-click the file and select Edit.

- Make necessary changes and save the file.

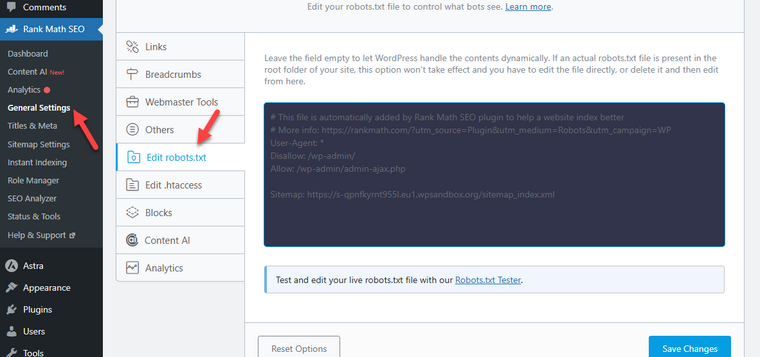

How to Edit WordPress Robots.txt File using Rank Math

If you prefer the plugin method, follow these steps to edit robots.txt with Rank Math:

- Go to Dashboard > Rank Math SEO > General Settings.

- Look for the Edit robots.txt tab to access the file editor.

- If you leave it empty, WordPress handles the file contents. Here, we want custom rules, so add them there and save the changes.

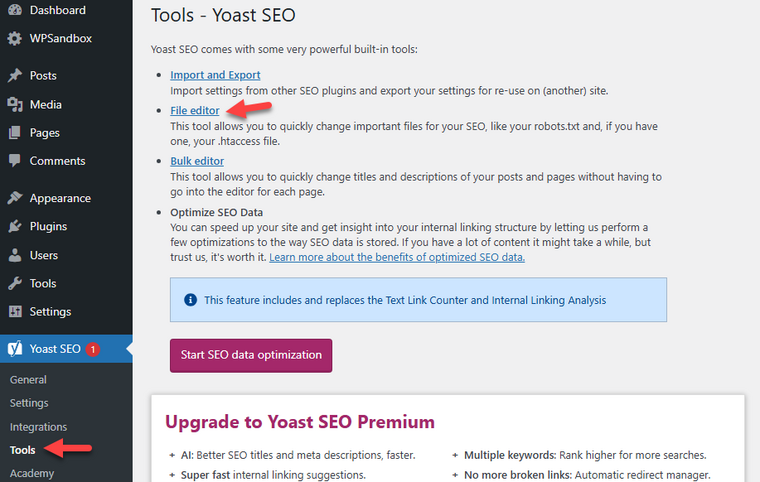

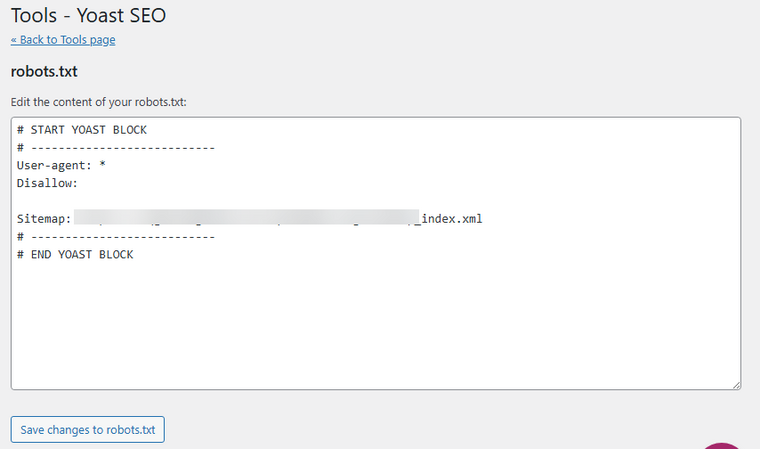

How to Edit WordPress Robots.txt File using Yoast

Now, if you are using Yoast SEO, follow these steps:

- Go to WP Dashboard > Yoast SEO > Tools > File Editor.

- There, you will see the robots.txt file. If you don’t see one, use Yoast’s built-in option to create a robots.txt file.

- Modify the file there and save the changes. And that’s it.

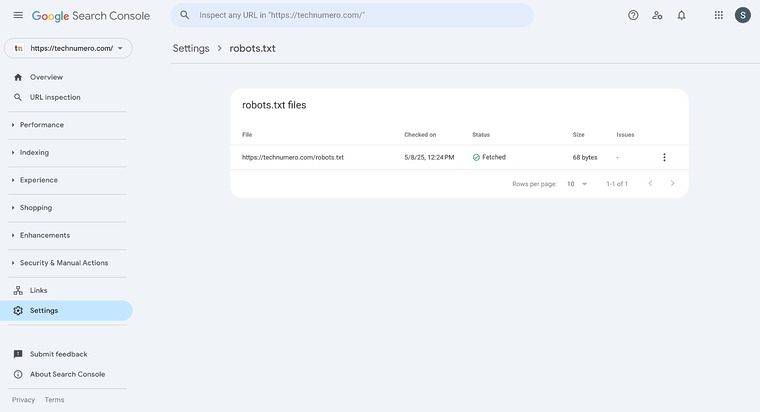

Always double-check after making edits. In Google Search Console, you can find your robots.txt status and any issues under Settings > Crawling > robots.txt. More details below.

How to Test Robots.txt File

Testing your robots.txt file is crucial. A small mistake can accidentally block key content from search engines. Here’s how to make sure your file is working as intended.

Use the robots.txt report in Google Search Console:

- Add your site to Google Search Console if you haven’t already.

- Go to Settings, find robots.txt under Crawling, and click Open Report.

- The report shows the robots.txt files Google found for your site, when they were last crawled, and any errors or warnings.

- To check whether a specific URL is blocked by robots.txt, use the URL Inspection tool.

You can also use other crawler tools like Screaming Frog to mimic how search engines view your site.
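Before uploading changes, you can also catch a few of the most damaging mistakes with a quick script. The minimal Python sketch below (lint_robots is a hypothetical helper, and its checks are only examples) warns when the * group disallows the whole site and when no absolute Sitemap URL is declared. It complements, rather than replaces, Search Console and crawler tools.

```python
from __future__ import annotations


def lint_robots(text: str) -> list[str]:
    """Return warnings for a few common, high-impact robots.txt mistakes."""
    warnings: list[str] = []
    sitemaps: list[str] = []
    agents: list[str] = []
    in_rules = False
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:  # a User-agent line after rules begins a new group
                agents, in_rules = [], False
            agents.append(value)
        elif field in ("allow", "disallow"):
            in_rules = True
            if field == "disallow" and value == "/" and "*" in agents:
                warnings.append("User-agent: * has Disallow: / (is the whole site meant to be blocked?)")
        elif field == "sitemap":
            sitemaps.append(value)
    if not sitemaps:
        warnings.append("No Sitemap line found.")
    for url in sitemaps:
        if not url.startswith(("http://", "https://")):
            warnings.append(f"Sitemap URL is not absolute: {url}")
    return warnings


if __name__ == "__main__":
    staging_leftover = "User-agent: *\nDisallow: /\n"
    for warning in lint_robots(staging_leftover):
        print("WARNING:", warning)
```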

Common Mistakes to Avoid with WordPress Robots.txt

While tweaking the robots.txt file, here are the main mistakes you must avoid:

- No robots.txt file for subdomains

- Incorrect placement of Robots.txt file

- Blocking essential resources

- Not updating the robots.txt file after a major site update

- Not including a link to the sitemap

- Using conflicting rules

- Disallowing all bots unintentionally

Below, we will share what each mistake can cause and how you can avoid it.

1. No Robots.txt File for Subdomains

A common mistake is assuming that the main domain’s robots.txt file also controls subdomains.

In reality, search engines treat each subdomain as a separate site. Without a specific robots.txt file, subdomains like blog.yourdomain.com or shop.yourdomain.com may lack proper instructions for search engines.

Without one, search engines may crawl and index duplicate or sensitive content on those subdomains. To avoid this, upload a tailored robots.txt file to each subdomain’s root directory (for example, via FTP). This gives you proper crawl control on every part of your site.
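Crawlers look for robots.txt in exactly one place per host: the root of that scheme and host (including the port, if one is used). The minimal Python sketch below, built around a hypothetical robots_url_for helper, derives that location from any page URL, which shows why blog.yourdomain.com needs its own file.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url_for(page_url: str) -> str:
    """Return the one robots.txt URL that governs crawling of page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (netloc includes any port); the path is always /robots.txt.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    for url in ("https://yourdomain.com/blog/hello-world/",
                "https://blog.yourdomain.com/hello-world/",
                "https://shop.yourdomain.com/cart/"):
        print(url, "->", robots_url_for(url))
```

The same logic explains the placement mistake: a file uploaded to a subfolder, such as yourdomain.com/blog/robots.txt, never shows up as a result, because crawlers simply don’t look there.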

2. Incorrect Placement of Robots.txt File

A commonly overlooked mistake is placing the robots.txt file in the wrong location. For the file to be effective, it must be located in your website’s root directory.

For example, yourdomain.com/robots.txt.

If it’s placed in a subfolder or elsewhere, search engine crawlers won’t be able to find it, and your instructions for search engines will be completely ignored.

This issue typically occurs when users upload the file via FTP without confirming the correct file path. SEO plugins can make editing easier, but on complex setups they don’t always guarantee the file ends up in the proper directory.

When your robots.txt file isn’t correctly placed, it could result in poor search engine indexing, as your site won’t communicate which areas should be crawled or restricted.

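Always double-check the file’s location and make sure it sits at the root of your domain. That is usually, but not always, the folder where WordPress is installed; if WordPress lives in a subfolder such as /blog/, the file still belongs at yourdomain.com/robots.txt.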

3. Blocking Essential Resources

While tweaking the robots.txt file, ensure you are not blocking necessary files such as CSS, JS, or image files.

These assets play a critical role in how your website is displayed and functions for users and search engine crawlers.

When you block directories like /wp-content/ or /wp-includes/, you’re essentially preventing search engine crawlers from rendering your pages fully.

This makes it harder for them to understand your site layout, functionality, and even mobile responsiveness, ultimately affecting your visibility and indexing by search engines.

By keeping essential resources accessible, you help search engines crawl and render your site correctly, which supports your overall SEO performance.

4. Not Updating Robots.txt After Site Changes

If you redesign your site, switch themes, change URL paths, or move content into different folders, the existing robots.txt rules may no longer align with your current setup.

For example, a rule that disallows a now-deleted directory might remain in the robots.txt file.

Even worse, a rule that blocks staging or old theme directories might start affecting live content if the structure is reused.

This can lead to unintentional blocking of important content or failure to block sensitive areas, ultimately hurting your search engine visibility and confusing search engine crawlers.

To avoid this, always audit and revise your robots.txt file whenever you make structural updates to ensure it accurately reflects your site’s current layout and crawling preferences.

5. Not Including a Link to Your Sitemap

A sitemap provides a roadmap of your site’s structure, helping search engine bots discover all the essential pages.

When you don’t include your sitemap URL, crawlers might overlook deeper pages, affecting how thoroughly your site gets indexed. This can affect your search rankings and your presence in search results.

Here’s the correct format to add your sitemap to the robots.txt file:

Sitemap: https://yourdomain.com/sitemap_index.xml

Adding this line helps search engines like Google and Bing find your sitemap efficiently. The Sitemap line is not tied to any User-agent group, so it can appear anywhere in the file.

Make sure the sitemap URL is accurate and absolute, including the https:// part.

6. Using Conflicting Rules

Ensure there are no conflicting rules in the robots.txt file.

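For example, you might allow access to a specific directory and then accidentally disallow a subdirectory or file within it, or vice versa. Google resolves such overlaps by applying the most specific (longest) matching rule and, when an Allow and a Disallow are equally specific, the less restrictive Allow. Other crawlers may not follow the same logic.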

These conflicting rules can confuse search engine crawlers and result in inconsistent indexing behavior. This can lead to important pages being blocked from indexing by search engines, even if you intended otherwise.

Clear, non-redundant instructions for search engines are key to ensuring your content is crawled efficiently.

Always review your robots.txt file to avoid sending mixed signals, especially if you manually edit the file via the server or FTP.
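A quick way to review a group for mixed signals is to list every Allow/Disallow pair whose paths overlap. The minimal Python sketch below (find_overlaps is a hypothetical helper; it takes the (directive, path) pairs of a single User-agent group and ignores * and $) flags those pairs and notes which rule Google would apply under its documented precedence: the longer path wins, and Allow wins a tie.

```python
from __future__ import annotations

from itertools import combinations


def find_overlaps(rules: list[tuple[str, str]]) -> list[str]:
    """Flag Allow/Disallow pairs in one group whose paths overlap."""
    notes: list[str] = []
    for (dir_a, path_a), (dir_b, path_b) in combinations(rules, 2):
        if dir_a == dir_b or not path_a or not path_b:
            continue  # same verdict, or an empty rule that matches nothing
        if path_a == path_b:
            notes.append(f"Allow and Disallow both use {path_a}; Google applies Allow on a tie.")
        elif path_a.startswith(path_b) or path_b.startswith(path_a):
            winner = (dir_a, path_a) if len(path_a) > len(path_b) else (dir_b, path_b)
            notes.append(
                f"{dir_a.title()}: {path_a} overlaps {dir_b.title()}: {path_b}; "
                f"URLs under {winner[1]} follow the longer {winner[0].title()} rule."
            )
    return notes


if __name__ == "__main__":
    group = [("disallow", "/blog/"), ("allow", "/blog/"),
             ("disallow", "/shop/"), ("allow", "/shop/sale/")]
    for note in find_overlaps(group):
        print(note)
```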

7. Disallowing All Bots Unintentionally

This is one of the worst things you can do.

User-agent: *

Disallow: /

These lines tell all user agents, including Googlebot, Bingbot, and other search engine crawlers, to avoid crawling any page on the site.

As a result, your pages can drop out of search results or show up without descriptions, severely damaging your search engine visibility and organic traffic.

This mistake usually occurs when developers block crawling during a site’s development phase and forget to update the file before launch.

If this error is not caught early, it can negatively impact search engine rankings, and you may lose valuable indexing across your site.

To fix this, review the file carefully and replace the blanket rule with something like this:

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

These are some of the robots.txt best practices you can follow.

Frequently Asked Questions

Now, we will check some frequently asked questions regarding the topic.

What Is the WordPress Robots.txt File and Why Does It Matter?

The WordPress robots.txt file is a plain text file that gives instructions to search engine crawlers about which parts of your site to crawl or ignore. It’s crucial for efficient crawling and can impact your search engine optimization efforts.

How to Edit the Robots.txt File in WordPress?

You can edit the robots.txt file using an SEO plugin like Rank Math or the file manager in your hosting dashboard, or through a file editor if you have FTP access. This lets you set custom rules for search engine bots.

Can I Block Search Engines from Indexing Certain Pages?

Yes, you can block search engine crawlers from crawling specific folders, like the admin folder or private areas, by adding Disallow rules to your robots.txt. Keep in mind that Disallow controls crawling, not indexing; to keep a page out of search results, use a noindex robots meta tag and leave the page crawlable.

What Should a Simple Robots.txt File Look Like?

A simple robots.txt file might allow all user agents and block sensitive folders. For example, here is a WordPress robots.txt example:

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Will a Bad Robots.txt File Hurt My Search Rankings?

Yes, improper configuration can block important pages from being indexed, hurting your search rankings. Always double-check your rules in Google Search Console to ensure effective crawling and an optimized crawl rate.

Where Is the Robots.txt File Path in WordPress?

By default, WordPress generates a virtual robots.txt file located at the root of your domain (e.g., yourwebsite.com/robots.txt). You can override it with a physical file if you want custom rules.

Should I Use an SEO Plugin to Manage the Robots.txt File?

Yes, using an SEO plugin like Yoast or Rank Math simplifies editing your robots.txt file and ensures compatibility with other search engine optimization settings.

Conclusion

Optimizing your WordPress robots.txt file settings may seem like a small technical tweak, but it plays a big role in how efficiently search engine bots crawl your site.

A well-structured robots.txt file helps guide search engine crawlers, reduces unnecessary indexing, and improves overall search engine optimization.

As you have seen in this tutorial, the robots.txt file lives in your site’s root directory (usually public_html). You can tweak it using a dedicated SEO plugin, FTP, or your control panel’s file editor.

If you already use Rank Math, we recommend its robots.txt editor to keep everything simple. You can also use Rank Math’s robots.txt tester tool to validate your rules.

You should also monitor Google Search Console to make sure your site is being crawled efficiently.

Do you know any other methods to optimize the WordPress robots.txt file even further?

Let us know in the comments.
